In this post I will describe some automated testing techniques. Testing is done to assure quality in our systems. But what is quality? Quality means that our software is reliable, predictable and, well... works as intended. Our software is often only as good as our tests of it.

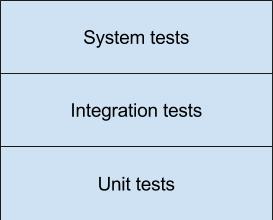

I will go through some test techniques here: unit tests, integration tests and system tests - starting with unit tests.

Unit tests

Unit tests test the smallest parts of our systems, commonly known as units. A unit could be a method, a class or a procedure. Unit tests are good at getting into all of the corners of our code - if the code is highly testable, meaning that it is easy to control dependencies and input in order to trigger different scenarios (more on this later). The code also has to be observable: we need to be able to verify the outcome, whether this is direct output or an event being triggered.

Since we are testing smaller units with unit tests, it is easier to make sure we have a high level of test coverage. The methods in our codebase have many different paths and decision points, and having one test for all of this often becomes messy because it tests too much. Therefore a unit usually has not just one test but several, each covering a different path or decision. In object-oriented programming the lowest units are classes, and what is tested is the public methods on these. I am a firm believer that private methods should not be tested.
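To make the "several tests per unit" point concrete, here is a minimal sketch using Python's built-in `unittest`. The function `shipping_cost` and its pricing rules are made up for illustration and are not taken from any real system. The unit has a few decision points, and each one gets its own small test.

```python
import unittest


def shipping_cost(order_total: float, express: bool = False) -> float:
    """Hypothetical unit under test with several decision points."""
    if order_total < 0:
        raise ValueError("order_total must not be negative")
    if express:
        return 15.0
    if order_total >= 100:
        return 0.0
    return 5.0


class ShippingCostTests(unittest.TestCase):
    # One test per path through the unit, instead of one big test.

    def test_standard_order_pays_flat_fee(self):
        self.assertEqual(shipping_cost(40.0), 5.0)

    def test_large_order_ships_free(self):
        self.assertEqual(shipping_cost(100.0), 0.0)

    def test_express_always_costs_extra(self):
        self.assertEqual(shipping_cost(250.0, express=True), 15.0)

    def test_negative_total_is_rejected(self):
        with self.assertRaises(ValueError):
            shipping_cost(-1.0)
```

Saved in a file, the tests can be run with `python -m unittest`. When one of them fails, its name already tells you which path is broken.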

In contrast to integration tests, we test our units alone under conditions that we have control over - in isolation. This means that anything our units depend on is abstracted away using test doubles such as mocks. Access to file systems and databases is therefore also cut; a unit test relies on test doubles instead. It is important that other units do not interfere with the unit we test. Changing something in one unit should not break the tests of another. The same applies to tests: one test should not make another test break - there should be no shared state between tests. This is why writing unit tests often results in a more SOLID codebase. Unit tests are also fast to execute and they test with high precision. If a unit test fails you immediately know which unit is failing - and most likely why. With integration or system tests, on the other hand, it can be hard to tell where in the flow something went wrong or what exactly happened. Unit tests often require little investigation when they fail.
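Isolation with test doubles could look something like the sketch below. `WelcomeService`, its repository and its mailer are hypothetical names invented for this example. The doubles come from the standard library's `unittest.mock`, and they are recreated in `setUp` so that no state is carried from one test to the next.

```python
import unittest
from unittest.mock import Mock


class WelcomeService:
    """Hypothetical unit: looks up a user and sends a welcome mail."""

    def __init__(self, user_repository, mailer):
        self._users = user_repository
        self._mailer = mailer

    def send_welcome(self, user_id):
        user = self._users.find(user_id)
        if user is None:
            return False
        self._mailer.send(user["email"], f"Welcome, {user['name']}!")
        return True


class WelcomeServiceTests(unittest.TestCase):
    def setUp(self):
        # Fresh doubles for every test: no database, no mail server,
        # and no state shared between tests.
        self.users = Mock()
        self.mailer = Mock()
        self.service = WelcomeService(self.users, self.mailer)

    def test_sends_mail_to_known_user(self):
        self.users.find.return_value = {"name": "Ada", "email": "ada@example.com"}

        self.assertTrue(self.service.send_welcome(1))

        # The outcome is observed through the call made on the double.
        self.mailer.send.assert_called_once_with("ada@example.com", "Welcome, Ada!")

    def test_sends_nothing_for_unknown_user(self):
        self.users.find.return_value = None

        self.assertFalse(self.service.send_welcome(2))

        self.mailer.send.assert_not_called()
```

Because the dependencies are passed in through the constructor, swapping them for doubles takes no extra machinery. That is one way in which writing unit tests nudges the design in a more SOLID direction.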

In short

- Tests the smallest parts of the system

- Stubs out dependencies

- Tests units in isolation

- Very fast to execute and precise

Integration tests

If unit tests are tests of units in isolation, then integration tests are tests of several units working together. Before, we did everything we could to test our units or modules alone. Now we want them to work together. This is not limited to classes and procedures, but extends to file systems, databases, networks and so on. Any dependency in your code is something to integrate with. This is what makes integration testing a lot tougher to exercise than unit testing. It is simply harder to provide the environment in which your tests should run.

Some commonly known methods of integration testing are top-down and bottom-up. For bottom-up you would start by integrating your database with your DAL (Data Access Layer), then with your service layer and finally your UI (User Interface). For top-down you would do it the other way around - starting with the UI. However, there is a lot of debate on how many components should be integrated at once, and when to use test doubles (stubs, fakes, mocks etc.). Integration testing often differs a lot from company to company.
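As a rough sketch of the first bottom-up steps, the example below integrates a real database with a data access layer and then with a service layer on top of it. All class names are hypothetical, and an in-memory SQLite database (from Python's standard `sqlite3` module) stands in for whatever database you actually use. Nothing is replaced by a test double here.

```python
import sqlite3
import unittest


class UserRepository:
    """Hypothetical DAL that talks to a real SQLite connection."""

    def __init__(self, connection):
        self._conn = connection
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS users "
            "(id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )

    def add(self, name):
        cursor = self._conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
        self._conn.commit()
        return cursor.lastrowid

    def find(self, user_id):
        row = self._conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return None if row is None else row[0]


class UserService:
    """Hypothetical service layer that depends on the repository."""

    def __init__(self, repository):
        self._repository = repository

    def register(self, name):
        cleaned = name.strip()
        if not cleaned:
            raise ValueError("name must not be empty")
        return self._repository.add(cleaned)

    def display_name(self, user_id):
        name = self._repository.find(user_id)
        return "unknown" if name is None else name.title()


class UserServiceIntegrationTests(unittest.TestCase):
    def setUp(self):
        # A new in-memory database per test keeps the tests independent.
        self.connection = sqlite3.connect(":memory:")
        self.service = UserService(UserRepository(self.connection))

    def tearDown(self):
        self.connection.close()

    def test_registered_user_can_be_read_back(self):
        user_id = self.service.register("  ada lovelace ")
        self.assertEqual(self.service.display_name(user_id), "Ada Lovelace")

    def test_unknown_id_falls_back_to_placeholder(self):
        self.assertEqual(self.service.display_name(999), "unknown")
```

A failure here could sit in the SQL, the repository or the service, which is exactly the loss of precision compared to unit tests. In return, the test proves that the layers actually fit together.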

It should be said that integration tests may not get into all corners of the code. You may have a class with some methods that are never invoked in this codebase, as you may only use a subset of its functions. That is okay: if the excess methods are not used they are not integrated, so integration tests would make no sense for them. They would still have to be unit tested, though, so that they are ready for integration testing. It can therefore be said that integration testing does not test as thoroughly as unit testing. However, it tests the units together, which is just as important. Two units might work perfectly in isolation - but be a disaster together.

Integration tests also test the code together with file systems, databases and networks. Whatever you integrate with, you need integration tests for. Ask yourself, how often have you had an error in an integration point? Probably less often if you are doing integration or system tests.

In short

- Tests modules of the system together

- Can be done on many levels

- Approaches can differ a lot from company to company

- Often slower than unit tests but tests more broadly

System tests

System tests - as the name suggests - test the whole system together! A full top-down integration test can be considered a system test. System tests are based on the specification for the system, which today is most likely a user story or a group of these (an epic perhaps). With the rise of agile, thoroughly documented specifications are rare and we are more focused on who our users are and what they want to do (scenarios).
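To give an idea of what an automated system test can look like, here is a small sketch that starts a complete (but tiny) application and only talks to it from the outside, the way a user or client would. The `/health` endpoint and the handler are hypothetical stand-ins for a real system. Everything used comes from the Python standard library.

```python
import json
import threading
import unittest
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for 'the whole system': a tiny HTTP API."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep the test output quiet


class HealthSystemTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Port 0 lets the operating system pick a free port.
        cls.server = ThreadingHTTPServer(("127.0.0.1", 0), HealthHandler)
        cls.thread = threading.Thread(target=cls.server.serve_forever, daemon=True)
        cls.thread.start()
        cls.base_url = f"http://127.0.0.1:{cls.server.server_address[1]}"
        # An empty ProxyHandler makes sure requests go straight to localhost.
        cls.client = urllib.request.build_opener(urllib.request.ProxyHandler({}))

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_health_endpoint_reports_ok(self):
        with self.client.open(self.base_url + "/health", timeout=5) as response:
            self.assertEqual(response.status, 200)
            self.assertEqual(json.loads(response.read()), {"status": "ok"})
```

The test knows nothing about the classes inside the system. It only checks the behaviour a user story would describe, which is what makes it a system test rather than an integration test.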

System testing has many subcategories. It is not just about the functional requirements, but also the non-functional ones, such as response times, uptime or usability. Below is a list of different test approaches that can be used in a system test; some may be applicable to your current system and some may not.

- Functional testing: Tests the system according to the specification. This is a lot like integration testing - but with the whole system. Functional testing verifies that all functions are in place and work as expected.

- Usability testing: Tests that the user interface matches the specification. This can also include non-functional requirements such as user experience design.

- Soak test: Tests how a system operates over a longer period of time (production use). Systems use resources and the soak test shows whether the system lets go of these or not. Examples of what it can reveal are memory leaks or connections that are never closed.

- Load test: Used to test if the system can handle the usual load it will have once in production. The test is done both for peak hours and regular hours to see how it performs and perhaps degrades.

- Stress test: Like a load test, but here the aim is to find the limit. The result will be a broken system - and found limits.

- Security test: An assessment of the security of the system. Tests whether there are any flaws in the security of the system.

- Exception test: Tests how exceptions and errors in the system are handled. This is the scenario that is often not tested. We have a tendency to only test the sunshine scenarios. Exception testing makes sure that we test our system when it fails - and that our error handling works as intended.
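Of the approaches in the list above, exception testing is probably the easiest to sketch in a few lines. The example below is a simplified illustration with made-up names (`checkout`, `PaymentGatewayDown` and the two gateways are hypothetical). It tests the sunshine scenario and, right next to it, the scenario where a dependency fails.

```python
import unittest


class PaymentGatewayDown(Exception):
    """Hypothetical error raised when the payment provider is unreachable."""


class WorkingGateway:
    """Stand-in gateway that accepts every charge."""

    def __init__(self):
        self.charged = []

    def charge(self, amount):
        self.charged.append(amount)


class FailingGateway:
    """Stand-in gateway that simulates an outage."""

    def charge(self, amount):
        raise PaymentGatewayDown("connection refused")


def checkout(gateway, amount):
    """Hypothetical checkout step that must fail gracefully."""
    try:
        gateway.charge(amount)
    except PaymentGatewayDown:
        return {"status": "retry_later", "charged": 0}
    return {"status": "paid", "charged": amount}


class CheckoutExceptionTests(unittest.TestCase):
    def test_sunshine_scenario(self):
        gateway = WorkingGateway()
        self.assertEqual(checkout(gateway, 50), {"status": "paid", "charged": 50})
        self.assertEqual(gateway.charged, [50])

    def test_gateway_outage_is_handled_not_raised(self):
        result = checkout(FailingGateway(), 50)
        self.assertEqual(result, {"status": "retry_later", "charged": 0})
```

In a real system test the failure would be provoked in the running system, for example by taking a dependency offline. The idea is the same: make the error happen on purpose and verify that the error handling does what it should.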

These are just some types of system tests. There are many other types and I have not found a full list of these. Wikipedia has a good list, but looking around the web you will find even more types of system tests.

Many of the above are hard to automate, but many are doable. I have written many Selenium tests during my time as a software developer. My experience with these is that they give great value - if they are maintained. But the smallest changes can break your tests, and it may take you a long time to figure out where or when the error was introduced, because you have a whole system to go through.

In short

- Tests the whole system

- Has many different approaches

- Depends on requirements from a specification (User stories)

- Can have both functional and non-functional requirements

- Tests very broadly

Summary

You may wonder where UAT (User acceptance test) was on my list. My list was intended for testing levels that can be automated. A UAT is where the user comes in and is therefore not meant to be automated. But after the System test comes the UAT.

All 3 types of tests are important. I often hear of companies having automated unit tests and doing some sort of system tests manually. I believe most start with manual testing of some sort, then get into unit testing, or perhaps system tests. This, I believe, is due to it requiring a smaller investment up front. Unit tests are easy to get started with as they are run in isolation. They also execute fast, giving you swift feedback.

Integration tests require a lot more to be automated, but also give great value. Testing your system end to end automatically lets you know if you break something. You may not know exactly where the issue is at first, but knowing something is off is the most important part. I find errors in integration points almost as often as I find errors that could be caught by a unit test.

All 3 levels of testing support each other well. You go from testing something very small and specific (unit tests) to testing everything very broadly (system tests).

Depending on your timeframe and development style you may have more or fewer of the different types of tests. If you do TDD then you will have many unit tests. If you have a distributed system you will probably have many integration and system tests. Often the number of unit tests outweighs the number of integration and system tests, as there is naturally more to test with unit tests (every method/procedure in the system).

Remember that tests are an investment in quality. Where and how you apply this in your system is up to you - as all systems are different.

Please leave a comment down below if you found this helpful or have something to add :)